Batchactive Scheduling:

Cluster Scheduling for Explicitly-speculative Tasks

David Petrou - Carnegie Mellon University, Dept. ECE Ph.D. Dissertation CMU-PDL-04-112. December 2004.

A process scheduler on a shared cluster, grid, or supercomputer that is informed which submitted tasks are possibly unneeded speculative tasks can use this knowledge to better support increasingly prevalent user work habits, lowering user-visible response time, lowering user costs, and increasing resource provider revenue.

|

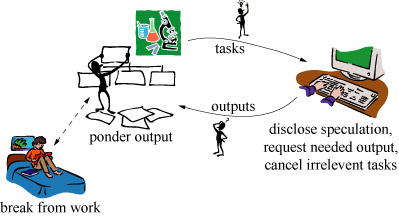

Figure 1: Users wish to pipeline the execution of chains of speculative — not known to be needed — tasks with the consideration of received task outputs and optional rest periods. This project removes existing barriers to exploiting this way of working. |

Large-scale computing often consists of many speculative tasks (tasks that may be canceled) to test hypotheses, search for insights, and review potentially finished products. For example, speculative tasks are issued by bioinformaticists comparing DNA sequences, computer graphics artists rendering scenes, and computer researchers studying caching. This behavior (exploratory searches and parameter studies, made more common by the cost effectiveness of cluster computing) runs on existing schedulers that lack speculative task support, which results in a mismatch of goals and suboptimal scheduling. Users wish to reduce both the time they wait for needed task output and the amount they are charged for unneeded speculation, so it is unclear to them how many speculative tasks they should submit.

This project introduces 'batchactive' scheduling (combining batch and interactive characteristics) to exploit the inherent speculation in common application scenarios. With a batchactive scheduler, users submit explicitly labeled batches of speculative tasks exploring ambitious lines of inquiry, and users interactively request task outputs when these outputs are found to be needed. After receiving and considering an output for some time, a user decides whether to request more outputs, cancel tasks, or disclose new speculative tasks. Users are encouraged to disclose more computation because batchactive scheduling intelligently prioritizes among speculative and non-speculative tasks, providing good wait-time-based metrics, and because batchactive scheduling employs an incentive pricing mechanism which charges for only requested task outputs (i.e., unneeded speculative tasks are not charged), providing better cost-based metrics for users. These aspects can lead to higher billed server utilization, encouraging batchactive adoption by resource providers organized as either cost- or profit-centers.
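The submit, request, and cancel cycle and the pay-only-for-requested-outputs rule can be illustrated with a minimal sketch. It is not the dissertation's scheduler or simulator: the names `Task`, `TwoTierScheduler`, `bill`, and `rate_per_second` are hypothetical, and a flat charge per unit of service time is an assumption made only for illustration.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    service_time: float      # resource time the task consumes when run
    requested: bool = False  # True once the user has asked for its output


class TwoTierScheduler:
    """Illustrative two-tier queue: requested tasks run before speculative ones."""

    def __init__(self):
        self.requested = deque()    # outputs a user is waiting on, FCFS
        self.speculative = deque()  # disclosed but not yet requested, FCFS

    def disclose(self, task):
        """Submit a task that is explicitly labeled as speculative."""
        self.speculative.append(task)

    def request(self, name):
        """Promote a disclosed task once its output is found to be needed."""
        for task in list(self.speculative):
            if task.name == name:
                self.speculative.remove(task)
                task.requested = True
                self.requested.append(task)
                return task
        return None

    def cancel(self, name):
        """Drop a speculative task the user no longer wants."""
        self.speculative = deque(t for t in self.speculative if t.name != name)

    def next_task(self):
        """Pick the next task to run: requested tier first, then speculative."""
        if self.requested:
            return self.requested.popleft()
        if self.speculative:
            return self.speculative.popleft()
        return None


def bill(tasks, rate_per_second=1.0):
    """Charge only for tasks whose outputs were requested (assumed flat rate)."""
    return sum(t.service_time * rate_per_second for t in tasks if t.requested)


sched = TwoTierScheduler()
tasks = [Task("a", 10.0), Task("b", 20.0), Task("c", 5.0)]
for t in tasks:
    sched.disclose(t)
sched.request("c")   # the user finds that c's output is needed
sched.cancel("b")    # and abandons b
run_order = [sched.next_task().name for _ in range(2)]
print(run_order, bill(tasks))  # ['c', 'a'] 5.0
```

In this sketch task `a` still runs when the server would otherwise be idle, but because its output is never requested it adds nothing to the bill.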

Not all tasks are equal: only tasks whose outputs users eventually desire matter. This leads me to introduce two ideas. The first is the 'visible response time' metric, which accrues for each task in a batch of potentially speculative tasks from the moment the user needs its output, not from the moment the entire batch was submitted. The second is the batchactive pricing mechanism of charging only for needed tasks, which encourages users to disclose future work while remaining resilient to abuse. I argue that the existence of user think times, user away periods, and server idle time makes batchactive scheduling applicable to today’s systems.
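As a rough illustration of the metric, the sketch below computes a mean visible response time for one batch. The record layout and function names are hypothetical, and two details are assumptions rather than definitions taken from the dissertation: a task that finished before it was requested is counted as zero wait, and tasks that are never requested are left out of the mean.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskRecord:
    submit_time: float             # when the batch containing the task was disclosed
    completion_time: float         # when the task finished running
    request_time: Optional[float]  # when the user asked for the output; None if never


def visible_response_time(task):
    """Time the user actually waits: from the request until the output exists."""
    if task.request_time is None:
        return None  # never needed, so it contributes nothing to the metric
    # Assumption: output that is ready before the request costs the user no wait.
    return max(0.0, task.completion_time - task.request_time)


def mean_visible_response_time(tasks):
    """Average the waits over requested tasks only."""
    waits = [w for w in map(visible_response_time, tasks) if w is not None]
    return sum(waits) / len(waits) if waits else 0.0


batch = [
    TaskRecord(submit_time=0.0, completion_time=10.0, request_time=15.0),  # ready early
    TaskRecord(submit_time=0.0, completion_time=30.0, request_time=20.0),  # user waits 10
    TaskRecord(submit_time=0.0, completion_time=40.0, request_time=None),  # never requested
]
print(mean_visible_response_time(batch))  # 5.0
```

Measured from submission instead, the two requested tasks would show response times of 10 and 30, which overstates what the user experienced in this example.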

I study the behavior of speculative and non-speculative scheduling using a highly parameterizable discrete-event simulator of user and task behavior based on important application scenarios. I contribute this simulator to the community for further scheduling research.

|

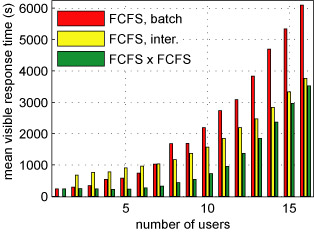

| Figure 2: How the number of users affects mean visible response time (lower is better). With few users, batch FCFS is better than interactive FCFS; with many, interactive FCFS is better than batch FCFS. Batchactive FCFS x FCFS adapts and always performs best. |

For example, over a broad range of realistic simulated user behavior and task characteristics, I show that under a batchactive scheduler visible response time is improved by at least a factor of two for 20% of the simulations. A batchactive scheduler that favors users who historically have desired a greater fraction of the tasks they speculatively disclosed provides additional performance gains and is resilient to a denial-of-service attack. Another result is that visible response time can be improved while increasing the throughput of tasks whose outputs were desired. Under some situations, user costs decrease while server revenue increases. A related result is that more users can be supported and greater server revenue generated while achieving the same mean visible response time. A comparison against an impractical batchactive scheduler shows that the easily implementable two-tiered batchactive schedulers capture most of the performance gains available to any batchactive scheduler. Finally, I demonstrate deployment feasibility by describing how to integrate a batchactive scheduler with a popular clustering system.
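One way to picture a scheduler that favors users with a better record of needing what they disclose is sketched below. This is an illustrative guess at the idea, not the policy evaluated in the dissertation: `UserHistory`, `pick_speculative`, the neutral score of 0.5 for users without history, and the arrival-order tie-break are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class UserHistory:
    disclosed: int = 0  # speculative tasks the user has disclosed so far
    requested: int = 0  # how many of those the user later requested

    def needed_fraction(self):
        # Assumption: a user with no history gets a neutral score of 0.5.
        if self.disclosed == 0:
            return 0.5
        return self.requested / self.disclosed


def pick_speculative(queue, histories):
    """Choose the next speculative task from (user, task_name) pairs in arrival order.

    Tasks from users with a higher historical needed fraction go first;
    ties fall back to arrival order.
    """
    if not queue:
        return None

    def priority(indexed_entry):
        index, (user, _task_name) = indexed_entry
        fraction = histories.get(user, UserHistory()).needed_fraction()
        return (-fraction, index)

    _, entry = min(enumerate(queue), key=priority)
    return entry


histories = {
    "flooder": UserHistory(disclosed=100, requested=1),  # discloses far more than needed
    "careful": UserHistory(disclosed=10, requested=8),
}
queue = [("flooder", "f1"), ("flooder", "f2"), ("careful", "c1")]
chosen = pick_speculative(queue, histories)
print(chosen)  # ('careful', 'c1')
```

Under this ordering, a user who floods the queue with speculation that is never requested sinks in priority, which is the intuition behind the resilience claim above.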

Publications

- Scheduling Speculative Tasks in a Compute Farm. David Petrou, Garth A. Gibson, Gregory R. Ganger. Proceedings of the ACM/IEEE Supercomputing 2005 Conference, Seattle, Washington, November, 2005.

Abstract / PDF [569K]

- Cluster Scheduling for Explicitly-speculative Tasks. David Petrou. Carnegie Mellon University Ph.D. Dissertation CMU-PDL-04-112, December 2004.

Abstract / PDF [4.2M]

- Cluster Scheduling for Explicitly-Speculative Tasks. David Petrou, Gregory R. Ganger, Garth A. Gibson. Proceedings of the 18th Annual ACM International Conference on Supercomputing (ICS’04), June 26-July 1, 2004, Saint-Malo, France.

Abstract / PDF [443K]

- Scheduling Explicitly-speculative Tasks. David Petrou, Gregory R. Ganger, Garth A. Gibson. Carnegie Mellon University Technical Report CMU-CS-03-204, November 2003.

Abstract / Postscript [2.0M] / PDF [400K]

Simulator Code

- Batchactive Scheduler simulator

Readme / Simulator code [180K]

Acknowledgements

We thank the members and companies of the PDL Consortium: Amazon, Google, Hitachi Ltd., Honda, Intel Corporation, IBM, Meta, Microsoft Research, Oracle Corporation, Pure Storage, Salesforce, Samsung Semiconductor Inc., Two Sigma, and Western Digital for their interest, insights, feedback, and support.