NASD: Enabling Technologies

Storage architecture is ready to change as a result of the synergy among five overriding factors:

- I/O-bound applications,

- new drive attachment technologies,

- an excess of on-drive transistors,

- the convergence of peripheral and interprocessor switched networks, and

- the cost of storage systems.

I/O-bound Applications

Traditional distributed filesystem workloads are dominated by small random accesses to small files whose sizes grow slowly over time. In contrast, new workloads are much more I/O-bound: they involve data types such as video and audio, and applications such as data mining of retail transactions, medical records, or telecommunication call records.

New Drive Attachment Technology

Disk bandwidth is increasing at a rate of 40% per year. High transfer rates have increased pressure on the physical and electrical design of drive buses, dramatically reducing the maximum bus length. At the same time, people are building systems of clustered computers with shared storage, which must attach many hosts to the same drives. Therefore, the storage industry is beginning to encapsulate drive communication over Fibrechannel, a serial, switched, packet-based peripheral network that supports long cable lengths, more ports, and more bandwidth. NASD will evolve the SCSI command set that is currently being encapsulated over Fibrechannel to take full advantage of the switched-network technology for both higher bandwidth and increased flexibility.
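To put the 40% annual growth rate in perspective, compounding it shows how quickly a fixed bus design falls behind the drives attached to it. This is a simple worked calculation assuming the rate stays constant; $B_0$ is a placeholder for today's per-drive bandwidth, not a figure from the text:

```latex
B(t) = B_0 \,(1.4)^{t}, \qquad
t_{2\times} = \frac{\ln 2}{\ln 1.4} \approx \frac{0.693}{0.336} \approx 2.06\ \text{years}, \qquad
\frac{B(5)}{B_0} = 1.4^{5} \approx 5.38
```

At this rate per-drive bandwidth roughly doubles every two years, so a bus sized for today's drives would need to carry about 5.4 times as much per drive within five years.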

Excess of On-drive Transistors

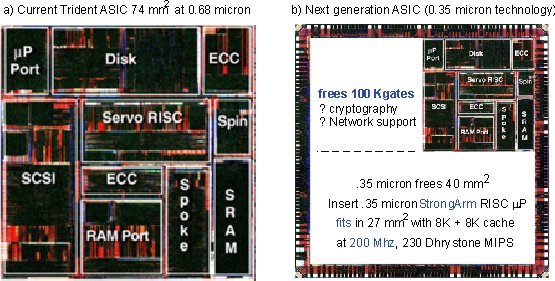

The increasing transistor density in inexpensive ASIC technology has lowered disk drive cost and increased performance by integrating sophisticated special-purpose functional units into a small number of chips. Figure 1 shows the block diagram for the ASIC at the heart of Quantum’s Trident drive. When drive ASIC technology advances from 0.68-micron to 0.35-micron CMOS, drive designers will be able to add a 200 MHz StrongARM microcontroller and still leave space equivalent to 100,000 gates for functions such as on-chip DRAM or cryptographic support. While this may seem like a major jump, Siemens’ TriCore integrated microcontroller and ASIC architecture promises to deliver a 100 MHz, 3-way issue, 32-bit datapath with up to 2 MB of on-chip DRAM and customer-defined logic in 1998.

Figure 1: Quantum’s Trident disk drive features the ASIC on the left (a). Integrated onto this chip in four independent clock domains are 10 functional units with a total of about 110,000 logic gates and a 3 KB SRAM: a disk formatter, a SCSI controller, ECC detection, ECC correction, spindle motor control, a servo signal processor and its SRAM, a servo data formatter (spoke), a DRAM controller, and a microprocessor port connected to a Motorola 68000-class processor. By advancing to the next higher ASIC density, the same die area could also accommodate a 200 MHz StrongARM microcontroller and still have space left over for DRAM or additional functional units such as cryptographic or network accelerators.
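A rough way to see why the move from 0.68 to 0.35 micron frees so much room is to assume ideal scaling, in which gate density grows with the inverse square of the feature size. Here $\rho_{\lambda}$ (a symbol introduced only for this sketch) denotes gates per unit area at a feature size of $\lambda$ microns. This is an idealization, since I/O pads, analog circuitry, and wiring usually shrink less, so treat the result as an upper bound rather than a design figure:

```latex
\frac{\rho_{0.35}}{\rho_{0.68}} \approx \left(\frac{0.68}{0.35}\right)^{2} \approx 3.77,
\qquad
110{,}000 \times 3.77 \approx 415{,}000\ \text{gate equivalents in the same die area}
```

Under this assumption, the same die could hold roughly 300,000 gate equivalents beyond the ~110,000 gates the current functional units occupy.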

Convergence of Peripheral and Interprocessor Networks

Scalable computing is increasingly based on clusters of workstations. In contrast to the special-purpose, highly reliable, low-latency interconnects of massively parallel processors such as the IBM SP2, Intel Paragon, and Caltech Cosmic Cube, clusters typically use Internet protocols over commodity LAN routers and switches. To make clusters effective, low-latency network protocols and user-level access to network adapters have been proposed, and a new adapter card interface, the Virtual Interface Architecture, is being standardized. These developments continue to narrow the gap between the channel properties of peripheral interconnects and the network properties of client interconnects, making Fibrechannel and Gigabit Ethernet look more alike than different.

Cost-ineffective Storage Servers

In high-performance distributed filesystems, there is a high cost overhead associated with the server machine that manages filesystem semantics and bridges traffic between the storage network and the client network. Figure 2 illustrates this problem for bandwidth-intensive applications. Based on cost and peak performance estimates, we compare the expected overhead cost of a storage server as a fraction of the raw storage cost. Servers built from high-end components have an overhead that starts at 1,300% for one server-attached disk! Assuming dual 64-bit PCI buses that deliver every byte into and out of memory once, the high-end server saturates with 14 disks, 2 network interfaces, and 4 disk interfaces, at a 115% overhead cost. The low-cost server is more cost-effective: one disk incurs a 380% cost overhead and, with a 32-bit PCI bus limit, a six-disk system still incurs an 80% cost overhead. While we cannot accurately anticipate the marginal increase in the cost of a NASD over current disks, we estimate that the disk industry would be happy to charge 10% more. A 10% premium would mean a reduction in server overhead costs of at least a factor of 10 and in total storage system cost (neglecting the network infrastructure) of over 50%.

Figure 2: Cost model for the traditional server architecture. In this simple model, a machine serves a set of disks to clients using a set of disk interfaces (wide Ultra and Ultra2 SCSI) and network interfaces (Fast and Gigabit Ethernet). Using peak bandwidths and neglecting host CPU and memory bottlenecks, we estimate the server cost overhead at maximum bandwidth as the machine cost plus the cost of enough interfaces to carry the disks’ aggregate bandwidth, all divided by the total cost of the disks. While the prices are probably already out of date, the basic problem of a high server overhead is likely to remain. We report pairs of cost and bandwidth estimates. On the left, we show values for a low-cost system built from high-volume components. On the right, we show values for a high-performance, reliable system built from components recommended for mid-range and enterprise servers.
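The Figure 2 cost model can be sketched in a few lines of Python. This is a minimal sketch, not the authors' actual spreadsheet: the `Part` type and the `interfaces_needed`, `server_overhead`, and `max_disks` helpers are hypothetical names, and all prices and the per-disk bandwidth are made up. Only the interface rates (40 MB/s for wide Ultra SCSI, 100 Mb/s for Fast Ethernet, about 133 MB/s for a 32-bit, 33 MHz PCI bus) are standard peak figures. The bus limit follows the text's assumption that every byte crosses the bus once into memory and once out.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Part:
    """A priced component with a peak bandwidth (illustrative values only)."""
    cost: float       # dollars (hypothetical)
    bandwidth: float  # peak MB/s


def interfaces_needed(aggregate_mb_s: float, iface: Part) -> int:
    """Smallest number of interfaces whose combined peak covers the aggregate."""
    return math.ceil(aggregate_mb_s / iface.bandwidth)


def server_overhead(n_disks: int, disk: Part, machine_cost: float,
                    disk_if: Part, net_if: Part) -> float:
    """Server cost overhead as a fraction of raw disk cost.

    Mirrors the Figure 2 model: peak bandwidths only, host CPU and memory
    bottlenecks ignored. The server needs enough disk interfaces to bring
    in every disk's peak bandwidth and enough network interfaces to send
    it all back out to clients.
    """
    aggregate = n_disks * disk.bandwidth
    server_cost = (machine_cost
                   + interfaces_needed(aggregate, disk_if) * disk_if.cost
                   + interfaces_needed(aggregate, net_if) * net_if.cost)
    return server_cost / (n_disks * disk.cost)


def max_disks(bus_mb_s: float, disk: Part) -> int:
    """Disks one I/O bus can sustain if each byte crosses it twice
    (once into memory from a disk, once out to the network)."""
    return int(bus_mb_s // (2 * disk.bandwidth))


if __name__ == "__main__":
    # Hypothetical prices; interface bandwidths are standard peak rates.
    disk = Part(cost=500.0, bandwidth=10.0)
    scsi = Part(cost=200.0, bandwidth=40.0)      # wide Ultra SCSI, 40 MB/s
    fast_eth = Part(cost=100.0, bandwidth=12.5)  # Fast Ethernet, 100 Mb/s
    machine = 2000.0
    limit = max_disks(133.0, disk)               # 32-bit, 33 MHz PCI peak
    for n in range(1, limit + 1):
        pct = server_overhead(n, disk, machine, scsi, fast_eth)
        print(f"{n} disk(s): overhead {pct:.0%}")
```

Adding disks amortizes the fixed machine cost, so the overhead falls until the bus saturates; the `math.ceil` calls capture the step cost of buying a whole extra interface once the aggregate bandwidth crosses an interface boundary.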