dbug: Systematic and Scalable Testing of Concurrent Systems

To improve quality of an implementation of a distributed and/or multi-threaded system, software engineers inspect code and run tests. However, the concurrent nature of such systems makes these tasks challenging. While the effectiveness of code inspection varies with the ability of a software engineer to fathom possible scenarios in which threads could interact, in testing, repeated execution of the same test can have different outcomes. In practice, the concurrent nature of distributed and multi-threaded systems is commonly addressed by stress testing, which repeatedly executes a test hoping that eventually all interesting outcomes of the test will be exercised.

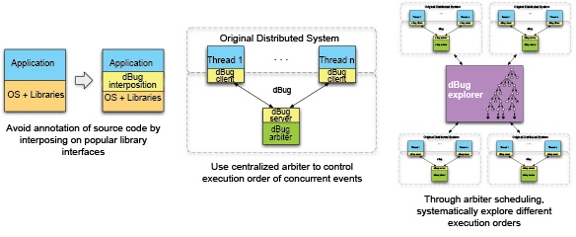

This project explores an alternative to stress testing called systematic testing, which controls the order in which important concurrent events occur. By doing so, the method can systematically enumerate different scenarios in which important concurrents events may execute. Our systematic testing method is implemented as part of the dbug tool, which enables systematic testing of unmodified distributed and multi-threaded systems designed for POSIX-compliant operating systems.

The dbug tool can be thought of as a light-weight model checker, which uses both the implementation of a distributed and multi-threaded system and its test as an implicit description of the state space to be explored. In this state space, the dbug tool performs a reachability analysis checking for a number of safety properties including the absence of 1) deadlocks, 2) conflicting non-reentrant function calls, and 3) system aborts and runtime assertions inserted by the user.

The systematic testing approach of the dbug tool has been successfully applied to a number of distributed and multi-threaded systems including: 1) Parallel Virtual File System, 2) a distributed key-value storage for FAWN, 3) student implementations of web server proxies from the 15-213 course, and 4) flexible transactional storage Stasis.

|

dBug Approach: Design & Implementation |

People

FACULTY

GRAD STUDENTS

Publications

- Runtime Estimation and Resource Allocation for

Concurrency Testing.

Jiri Simsa, Randy Bryant, Garth Gibson.

Carnegie Mellon University Parallel Data Lab Technical Report CMU-PDL-12-113.

December 2012.

Abstract / PDF [490K]

- Scalable Dynamic Partial Order Reduction. Jiri Simsa, Randy Bryant, Garth Gibson, Jason Hickey. Third Int. Conf. on Runtime Verification (RV2012), 25-28 September 2012, Istanbul, Turkey.

Abstract / PDF [331K]

- Concurrent Systematic Testing at Scale. Jiri Simsa, Randy Bryant, Garth Gibson, Jason Hickey. Carnegie Mellon University Parallel Data Lab Technical Report CMU-PDL-12-101. May 2012.

Abstract / PDF [397K]

- dBug: Systematic Testing of Distributed and Multi-threaded Systems. Jiri Simsa, Randy Bryant, Garth Gibson.18th International Workshop on Model Checking of Software (SPIN'11), Snowbird UT, July 2011.

Abstract / PDF [149K]

- dBug: Systematic Evaluation of Distributed Systems. Jiri Simsa, Randy Bryant, Garth Gibson. 5th Int. Workshop on Systems Software Verification (SSV’10), co-located with 9th USENIX Symp. on Operating Systems Design and Implementation (OSDI’10), Vancouver BC, October 2010.

Abstract / PDF [168K]

Downloads

- Latest dbug distribution (0.4.0-beta) - TAR.GZ

- A patched version of a library referred to in dbug's installation instructions: TAR.GZ

Acknowledgements

The work in this project is based on research supported in part by the DoE, under award number DE-FC02-06ER25767 (PDSI), by the NSF under grant CCF-1019104, and by the MSR-CMU Center for Computational Thinking.

We thank the members and companies of the PDL Consortium: Amazon, Google, Hitachi Ltd., Honda, Intel Corporation, IBM, Jane Street, Meta, Microsoft Research, Oracle Corporation, Pure Storage, Salesforce, Samsung Semiconductor Inc., Two Sigma, and Western Digital for their interest, insights, feedback, and support.